7 Advanced Tools: NotebookLM and Deep Research

Tentative time: 12 minutes

7.1 Learning Objectives

By the end of this section, you will be able to:

- Understand RAG technology and how it enables analysis of large document collections

- Use NotebookLM effectively for multi-document analysis with proper citations

- Leverage Deep Research tools for comprehensive exploratory research

- Recognize the limitations of current AI research tools and plan accordingly

- Integrate advanced tools into your research workflow strategically

7.2 Why These Tools Matter

The literature review techniques we just covered work well for individual papers or small sets of documents. But what about when you need to analyze patterns across dozens or hundreds of documents? Or when you want to do comprehensive exploratory research on a new topic?

This is where advanced AI tools become transformative. They’re not just faster: they enable kinds of research that were previously impractical for individual researchers or small teams.

7.3 NotebookLM: Your Multi-Document Research Assistant

7.3.1 What Is NotebookLM?

NotebookLM is Google’s specialized tool for working with large document collections. Unlike regular chatbots, which answer mainly from their training data, NotebookLM grounds its answers in a custom knowledge base built from the documents you upload.

Key Capabilities:

- Upload up to 300 sources per notebook on paid plans, or 50 on the free tier at the time of writing (PDFs, Word docs, web pages, etc.)

- Ask questions across your entire document collection

- Get answers with specific citations to source material

- Click citations to see the exact text that supports each claim

- Create structured summaries of patterns across documents

7.3.2 The Technology Behind It: RAG Explained

RAG stands for “Retrieval Augmented Generation.” Here’s how it works in simple terms:

Step 1: Document Chunking. Your documents are broken into smaller chunks (usually a few paragraphs each).

Step 2: Vector Mapping. Each chunk is converted into a mathematical representation called a “vector” (also known as an embedding) that captures its meaning. Think of this as creating a map where similar content clusters together.

Step 3: Semantic Search. When you ask a question, the system converts the question into a vector as well and finds the chunks whose vectors are most similar to it.

Step 4: Context Assembly. The most relevant chunks are assembled and fed to the LLM as context for generating your answer.

Step 5: Citation Generation. The system keeps track of which chunks came from which documents, enabling precise citations.

Why This Matters: Vector search finds content based on meaning, not just exact word matches. This is a huge advantage over traditional “Control + F” searching, which only finds specific phrases. The AI can find relevant passages even when different terminology is used.

Because only the most relevant chunks are passed to the model, RAG lets you work with document collections far larger than any LLM’s context window while maintaining traceability to source material.
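To make the five steps concrete, here is a minimal sketch in Python. It is not how NotebookLM is implemented (those internals are not described here); it only mirrors the steps above using the standard library. The `embed` function is a deliberately crude stand-in that counts words, so unlike a real embedding model it only matches shared vocabulary, not meaning. The document names, sample texts, and all function names are made up for illustration.

```python
import math
import re
from collections import Counter

def chunk_document(doc_id, text, max_words=40):
    """Step 1: split a document into chunks of at most max_words words."""
    words = text.split()
    return [
        {"doc": doc_id, "chunk": i // max_words, "text": " ".join(words[i:i + max_words])}
        for i in range(0, len(words), max_words)
    ]

def embed(text):
    """Step 2 (stand-in): a word-count vector. Real systems use a learned embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, chunks, top_k=2):
    """Step 3: rank chunks by similarity to the question and keep the best top_k."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(q, embed(c["text"])), reverse=True)[:top_k]

def build_prompt(question, retrieved):
    """Steps 4 and 5: assemble context, tagging each chunk with its source for citation."""
    context = "\n".join(f"[{c['doc']}#{c['chunk']}] {c['text']}" for c in retrieved)
    return f"Answer using only the sources below and cite them.\n{context}\nQuestion: {question}"

# Made-up sample texts, purely for illustration.
documents = {
    "doc_a": "The bank expanded green lending for renewable energy projects. "
             "Risk management procedures were tightened after loan losses.",
    "doc_b": "Overseas lending declined as commercial banks took a larger role. "
             "Staff training programmes continued.",
}
chunks = [c for doc_id, text in documents.items() for c in chunk_document(doc_id, text)]
question = "What happened to overseas lending?"
top = retrieve(question, chunks, top_k=1)
prompt = build_prompt(question, top)
print(prompt)
```

The prompt that reaches the LLM contains only the retrieved chunk and its source tag, which is why the collection can be much larger than the context window and why each answer can point back to a specific passage.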

7.3.3 Practical NotebookLM Workflow

Step 1: Document Collection

Upload your research materials:

- Academic papers

- Policy reports

- Government documents

- News articles

- Your own notes and drafts

Step 2: Initial Exploration

Start with broad questions to understand your collection:

- “What are the main themes across these documents?”

- “Where do these authors disagree on key issues?”

- “What methodologies are being used to study this topic?”

Step 3: Focused Analysis

Dive deeper into specific aspects:

- “How do different authors define [key concept]?”

- “What evidence is presented for [specific claim]?”

- “Which documents discuss [specific methodology]?”

Step 4: Pattern Recognition

Look for connections and gaps:

- “Are there any contradictions in findings across studies?”

- “What research questions remain unanswered?”

- “How has thinking on this topic evolved over time?”

Step 5: Verification

Always click through citations to verify context and accuracy.

7.3.4 Real-World Example: Chinese Development Finance

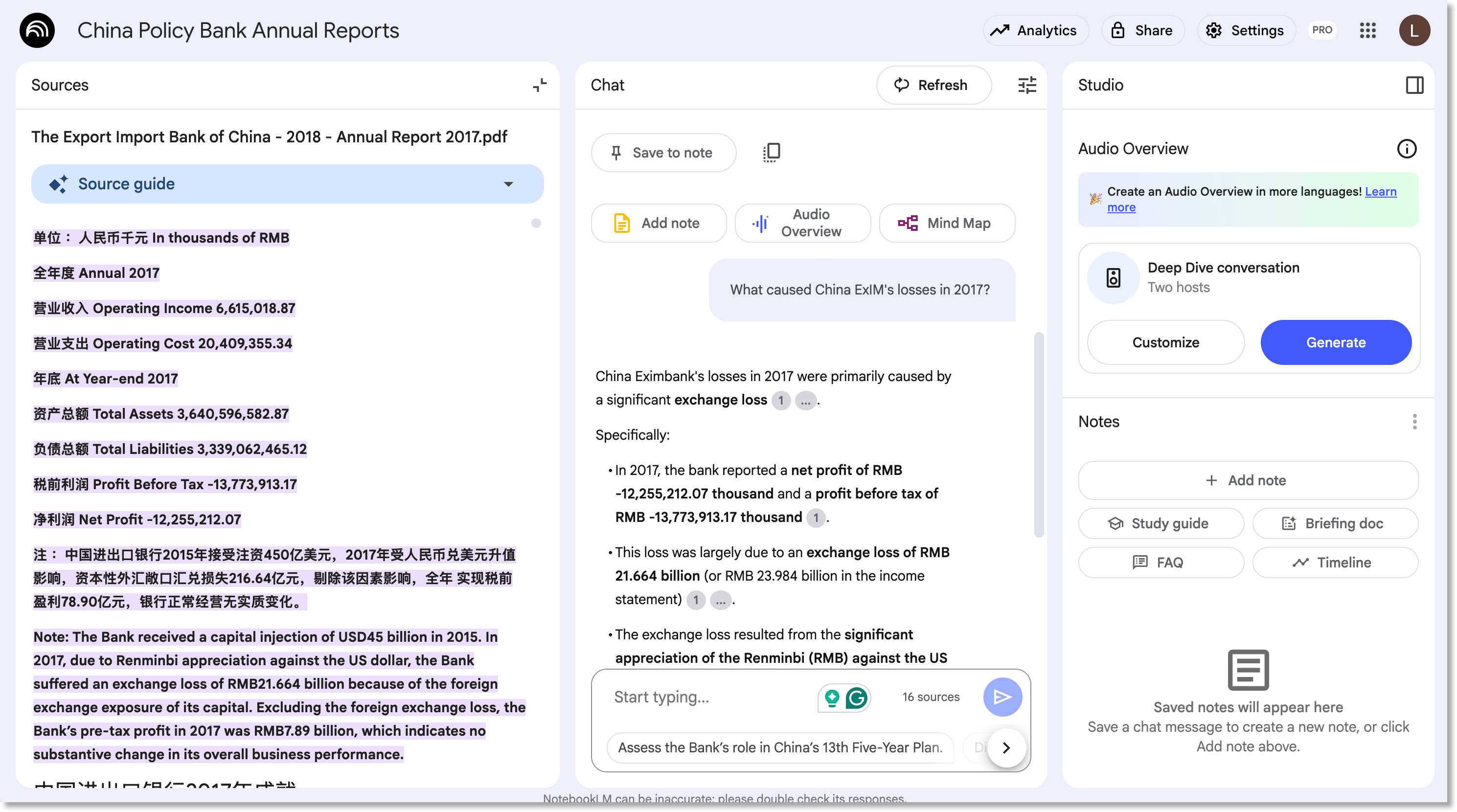

In our recent ODI research, we were trying to understand a shift in China’s overseas lending from policy banks to state-owned commercial banks. We analyzed the financial statements of the policy banks and wanted to complement this quantitative analysis with the banks’ own explanations in their annual reports.

Here’s what we uploaded to NotebookLM:

- 10 years of annual reports from China Development Bank and the Export-Import Bank of China. The reports contain text in both English and Mandarin; we assume the translations are good, but subtle differences between the two versions could be interesting.

Our approach: We used NotebookLM for exploratory analysis rather than relying on its outputs directly. When our financial analysis revealed losses at the Export-Import Bank of China in 2017, we could easily ask how this was explained in the annual reports. We explored how both banks discussed key topics like green lending, risk management, and overseas lending losses.

Key advantages:

- Faster than manual search: Dramatically quicker than “Control + F” searching across documents

- Semantic understanding: Vector search finds meaning, not just exact phrases

- Cross-institutional analysis: Having reports from both institutions together helped us distinguish between institution-specific issues and broader policy changes

- Multilingual capability: The system could search in both English and Mandarin (my co-author speaks Mandarin; I do not)

Our workflow:

- Upload all annual reports to NotebookLM

- Ask exploratory questions about patterns and changes over time

- Use NotebookLM results to identify which reports and sections to examine closely

- Verify findings by reading the original source material

- Incorporate insights into our broader financial analysis

Results: NotebookLM pointed us toward the right primary sources rather than providing our final analysis. This saved enormous time while maintaining research integrity through verification against original documents.

7.3.5 Best Practices for NotebookLM

Document Preparation:

- Use clear, descriptive file names

- Include document metadata (author, date, type)

- Consider organizing by theme or time period

- Remove documents that aren’t directly relevant

Questioning Strategy:

- Start broad, then narrow down

- Ask follow-up questions based on initial results

- Use specific terminology from your field

- Ask for comparisons and contrasts

Citation Verification:

- Always click through citations to verify context

- Check that quotes aren’t taken out of context

- Verify quantitative claims against source documents

- Be especially careful with technical or nuanced arguments
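Part of this checking can be scripted when you have the plain text of a source. The sketch below is one possible approach, not a NotebookLM feature: it tests whether a quoted passage appears verbatim in the source after normalizing case, whitespace, and curly quotes, and returns the surrounding text so you can read the context. The function names and the sample sentence are made up for illustration.

```python
import re

def normalize(text):
    """Lowercase, unify curly quotes, and collapse whitespace so line breaks don't matter."""
    for curly, straight in (("\u2018", "'"), ("\u2019", "'"), ("\u201c", '"'), ("\u201d", '"')):
        text = text.replace(curly, straight)
    return re.sub(r"\s+", " ", text).strip().lower()

def quote_in_source(quote, source_text, context_chars=80):
    """Return the quote with surrounding context if it appears in the source, else None."""
    src = normalize(source_text)
    q = normalize(quote)
    pos = src.find(q)
    if pos == -1:
        return None
    start = max(0, pos - context_chars)
    return src[start:pos + len(q) + context_chars]

# Made-up sample text, purely for illustration.
sample_source = "Losses were\nnot material in 2017, according to management."

print(quote_in_source("losses were not material", sample_source))  # found: prints context
print(quote_in_source("losses were material", sample_source))      # not found: prints None
```

A match only confirms that the words exist in the source. You still need to read the returned context to judge whether the quote is being used fairly.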

7.4 Deep Research Tools: AI-Powered Research Assistants

7.4.1 What Are Deep Research Tools?

Deep Research tools are AI systems that can conduct comprehensive research projects autonomously. You ask a question, and they typically spend 15-20 minutes researching the topic before producing a detailed report that can run 15-30 pages.

Deep Research tools represent one of the first major successes in “agentic workflows”: AI systems that can pursue goals autonomously rather than just responding to single queries.

What makes something “agentic”:

- Goal-oriented: Given a research question, the system formulates its own plan

- Autonomous execution: It conducts multiple searches and analysis steps without human guidance

- Adaptive behavior: It adjusts its approach based on what it finds

- Tool use: It can access external resources and synthesize information from multiple sources

Why this matters: Traditional chatbots respond to individual prompts. Agents can tackle complex, multi-step projects that previously required human coordination and decision-making. Deep Research is one of the clearest examples of this capability working at a practical level.

The technology is evolving rapidly. As Ethan Mollick explains in “The End of Search, The Beginning of Research”, we’re seeing the convergence of “Reasoners” (AI that can think through problems step-by-step) and “Agents” (AI that can take autonomous action). This combination is creating new possibilities for research assistance.

Available Tools:

- ChatGPT Deep Research (OpenAI) - Currently produces the most sophisticated analysis

- Claude Research (Anthropic) - Newer tool with shorter outputs, still developing

- Gemini Deep Research (Google) - Very long and detailed outputs, sometimes overwhelming

- Perplexity Pro - Uses DeepSeek’s R1 model, good middle ground between depth and accessibility

Note: This landscape is changing rapidly. Tool capabilities and availability shift frequently.

7.4.2 How Deep Research Works

Step 1: Query Clarification. The system often asks clarifying questions to understand what you’re really looking for.

Step 2: Research Planning. It creates a research plan with specific subtopics to investigate.

Step 3: Systematic Search. It conducts multiple searches across different sources and angles.

Step 4: Synthesis. It analyzes findings and creates a comprehensive report.

Step 5: Fact-Checking. It attempts to verify claims across multiple sources.
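The internals of commercial Deep Research tools are not public, so the following is only a control-flow sketch of Steps 2 to 5 under simplifying assumptions. The planner and search functions are hypothetical stand-ins passed in by the caller (a real system would call an LLM and a search engine), the toy claims and sources are placeholders, and Step 1 (clarification) is left out because it is an interactive exchange with the user.

```python
def deep_research(question, plan_fn, search_fn, min_sources=2):
    """Plan subtopics, search each one, then group claims and flag how well each is supported."""
    subtopics = plan_fn(question)                      # Step 2: research planning
    findings = {}
    for topic in subtopics:                            # Step 3: one search per subtopic
        findings[topic] = search_fn(f"{question} {topic}")

    report = []
    for topic, results in findings.items():            # Step 4: synthesis by subtopic
        sources_by_claim = {}
        for source, claim in results:
            sources_by_claim.setdefault(claim, set()).add(source)
        for claim, sources in sources_by_claim.items():  # Step 5: crude cross-source check
            status = "corroborated" if len(sources) >= min_sources else "single-source"
            report.append((topic, claim, sorted(sources), status))
    return report

# Hypothetical stand-ins so the sketch runs without any external service.
def toy_plan(question):
    return ["financing", "policy"]

def toy_search(query):
    if "financing" in query:
        return [("source_1", "claim A"), ("source_2", "claim A"), ("source_3", "claim B")]
    return [("source_4", "claim C")]

report = deep_research("barriers to adoption", toy_plan, toy_search)
for row in report:
    print(row)
```

Counting sources is a weak proxy for fact-checking: several sources can repeat the same error, which is one reason the verification steps later in this section still matter.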

7.4.3 Practical Example: Research Query

Question: “What are the main barriers to renewable energy adoption in Sub-Saharan Africa, and what policy interventions have shown promise?”

Deep Research Process:

- Clarification: “Should I focus on specific technologies or countries?”

- Research Plan: Infrastructure, financing, policy frameworks, case studies

- Systematic Search: Academic literature, policy reports, development bank analyses

- Synthesis: 20-page report with executive summary, detailed analysis, and recommendations

Typical Output Quality:

- Comprehensive coverage of major issues

- Multiple perspectives and sources

- Quantitative data where available

7.4.4 Limitations and Caveats

Data Access Limitations:

- Primarily accesses publicly available information

- Limited access to subscription academic databases

- May miss recent developments or specialized sources

- Bias toward English-language sources

Quality Variations:

- Can be confidently wrong about specific facts

- May oversimplify complex issues

- Sometimes misses important nuances

- Can struggle with rapidly evolving topics

Licensing Issues:

- Unclear what content deals exist with publishers

- This space is changing rapidly

- Academic database access remains limited

- Always verify claims against authoritative sources

7.4.5 My Deep Research Workflow

Step 1: Start with Known Topics. Test the system’s performance on topics where you have expertise to calibrate your sense of the “jagged frontier,” the uneven boundary between tasks the tool handles well and tasks where it fails.

Step 2: Use for Exploration. Let it identify areas and sources you might not have considered.

Step 3: Verify Key Claims. Fact-check important assertions, especially quantitative ones.

Step 4: Follow Up on Sources. Use the research as a roadmap to find primary sources.

Step 5: Integration. Combine AI research with your domain expertise and additional sources.

7.4.6 Realistic Expectations

What I’ve Found Impressive:

- Comprehensive coverage of complex topics

- Synthesis across multiple sources and perspectives

- Identification of contradictions and debates

- Quality equivalent to what I’d expect from a skilled research assistant after a week of work

What I’ve Found Concerning:

- Occasional confident assertions of false “facts”

- Sometimes misses crucial recent developments

- Can perpetuate biases present in training data

- May oversimplify politically sensitive issues

7.4.7 Beyond Academic Research

Deep Research tools aren’t just for scholarly work. I’ve found them helpful for many aspects of daily life that previously would have required a capable personal assistant or “chief of staff”:

- Meeting preparation: Understanding the backgrounds and expertise of people I’ll be meeting with (I used it to better understand the participants in this webinar!)

- Trip planning: Both professional and personal travel, from finding child-friendly attractions along road trip routes to understanding local customs and logistics

- Practical problem-solving: From figuring out how to stop woodpeckers from damaging my house to researching complex household decisions

- Professional briefings: Getting up to speed on new sectors, regulations, or policy developments before important conversations

This democratizes a research capability that was previously available only to those with dedicated staff support.

7.5 Integrating Advanced Tools into Your Workflow

7.5.1 The Strategic Approach

Use NotebookLM when:

- You have a collection of relevant documents

- You need to find patterns across multiple sources

- You want to verify claims against specific texts

- You’re doing literature synthesis or comparative analysis

Use Deep Research when:

- You’re starting research on a new topic

- You need broad coverage of an issue

- You want to identify key debates and perspectives

- You’re looking for policy examples or case studies

Always Remember:

- These are tools for widening your net, not replacing judgment

- Verify important claims against authoritative sources

- Use your domain expertise to assess quality and relevance

- Combine AI research with traditional scholarly methods

7.5.2 Building Your Advanced Research Workflow

Phase 1: Exploration

- Use Deep Research for initial topic mapping

- Upload key documents to NotebookLM

- Identify major themes and debates

Phase 2: Analysis

- Use NotebookLM to analyze patterns across documents

- Verify key claims from Deep Research

- Identify gaps and contradictions

Phase 3: Synthesis

- Combine AI insights with your domain knowledge

- Conduct focused searches for missing perspectives

- Create your own analytical framework

Phase 4: Verification

- Fact-check quantitative claims

- Verify citations and sources

- Ensure balanced representation of viewpoints

7.6 Hands-On Activity: Advanced Research Project

Let’s practice using these tools together:

Step 1: Choose Your Research Question. Select a topic you’re curious about but don’t know deeply.

Step 2: Deep Research Phase. Use a Deep Research tool to get broad coverage of your topic.

Step 3: Document Collection. Based on the Deep Research output, find 5-10 relevant documents to upload to NotebookLM.

Step 4: Focused Analysis. Use NotebookLM to dig deeper into specific aspects highlighted in the Deep Research output.

Step 5: Critical Assessment. Evaluate the quality and consistency of insights across both tools.

7.7 What You Can Do Now

- Test NotebookLM with a small collection of documents from your current research

- Try Deep Research on a topic you know well to calibrate its performance

- Develop your verification workflow for checking AI-generated claims

- Identify the research domains where these tools could be most valuable to you

- Create your advanced research protocol combining both tools strategically

7.8 The Research Frontier

These tools represent the current frontier of AI-assisted research. They’re not perfect, but they’re powerful enough to transform what’s possible for individual researchers and small teams.

The key is approaching them strategically:

- Use them to expand your scope, not replace your judgment

- Verify important claims through traditional scholarly methods

- Combine AI insights with your domain expertise

- Stay critical and maintain academic standards

As these tools continue to evolve, researchers who learn to use them effectively will have significant advantages in conducting comprehensive, policy-relevant research.

Up next: Coding Assistance and Data Analysis